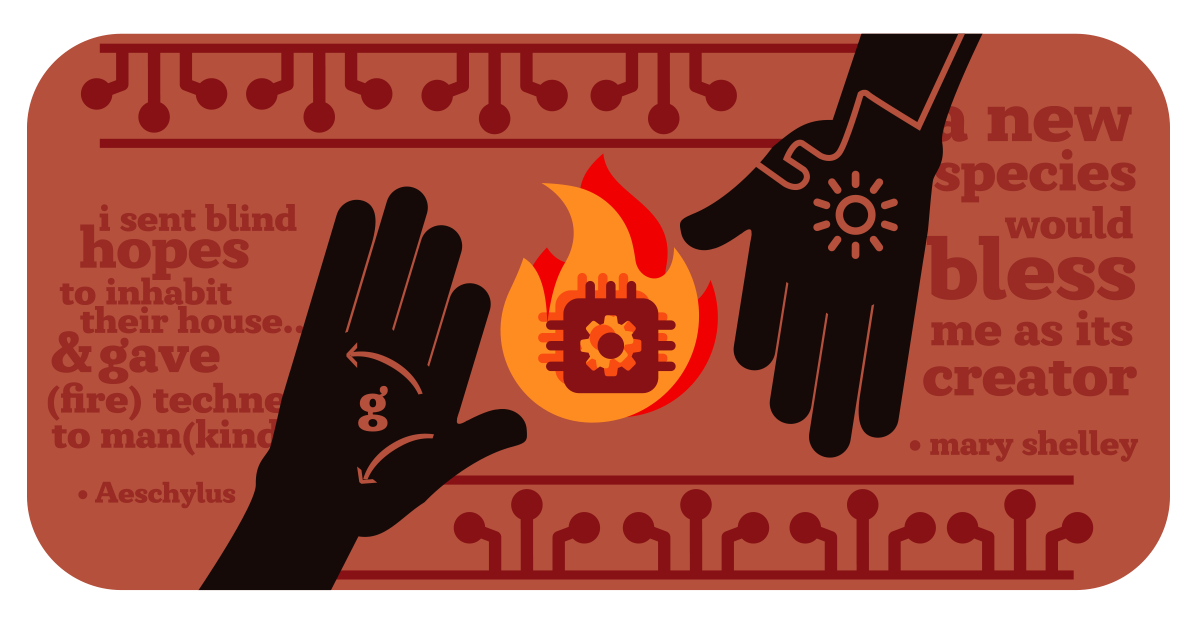

The Accidental Prometheus

How the tech industry stumbled on fire from the gods but doesn’t know what it has.

There’s a common narrative being pushed recently in the AI industry that artificial intelligence is a black box that nobody understands. Anthropic CEO Dario Amodei recently repeated this framing, stating “we don’t understand LLMs.” [1]

Part of this is myth-making: it creates a cool sci-fi mystique. But there’s also an element of truth to it. That truth, however, is an unintended admission of a fundamental intellectual failure. It’s not that the system is inherently unknowable. It’s that the industry lacks the tools to understand its own technology. And worse, it’s not even looking.

Instead, the complex probabilistic outputs of large language models (the very quality that makes them revolutionary) are treated as a bug rather than a feature. It is a bug… if the intent is to use them as replacements for existing reliable programmatic applications: glorified autocomplete or automation pipelines. But that misses what we actually discovered. To understand the disconnect, we need to look at how we got here.

A Brief History of Modern AI

The engineers who built today’s AI weren’t trying to create artificial intelligence at all. They were trying to fix Google Translate. For Siri to answer a question about the weather, teams of programmers had to anticipate your exact phrasing and hand-code both the recognition and the response. “What’s the weather?” or “How’s it looking outside?” probably works. But say “is it gonna rain on my parade?” Probably not. And creating the recognition pipeline for every possible input and response is hard, labor-intensive work. The translation problem was similar but worse.

The teams working on it were computational statisticians. Their solution reflected this: find patterns in massive amounts of data through formulas that automatically perform statistical analysis. Feed it millions of translated documents and let those formulas do the heavy lifting of connecting the dots of certain words appearing next to one another across languages and building meaning.

Meanwhile, the artificial intelligence researchers were working on entirely different problems. In the 80s and 90s, they were building systems that mimicked brain architecture, or tried to evolve intelligence through digital natural selection, or constructed elaborate logic trees. Because the compute just didn’t exist for these approaches, for much of that time AI research was in an “AI winter” with true breakthroughs potentially half a century away.

• • •

Then, the statisticians stumbled on a revolution: the transformer.

• • •

Google published “Attention Is All You Need” in 2017 [2]. The claim was not as far-reaching as the architecture would later reveal. But they did realize they had cracked machine learning because the algorithms they’d developed for translation turned out to be general-purpose. They could map out the relationships between any symbolic data. This was pattern recognition. Anything that could be tokenized could be understood. And anything could be tokenized… language, code, images.

OpenAI used this with GPT-1 to focus on basic language tasks like reading comprehension and by GPT-2 declared it “too dangerous to release” [3] for its ability to generate convincing fake news stories. But through all this, even as the terminology became artificial intelligence, the model was still seen fundamentally as a sophisticated text-generator. And while the landscape has evolved, this approach has not.

Pattern Matching Failure

This serendipity of stumbling onto general AI through pattern-matching algorithms created a pattern recognition failure in the industry itself. OpenAI’s Sam Altman admits “we certainly have not solved interpretability” [4]. Skeptics from Stuart Russell to insiders like Yann LeCun argue even sophisticated pattern matching can never be real intelligence, a position popularized by linguist Emily Bender with the catchy phrase “stochastic parrots” [5]. Ironically, the industry adopts this logic unconsciously even as it strives to build ever-more extractive and replacive AI.

But here’s what both camps missed: cognition is pattern matching.

This is an insight that might have surfaced had the industry not sidelined a generation of cognitive science researchers. OpenAI itself remains strangely atheoretical about what they’ve built, treating the breakthrough discovery like a scaling problem. More parameters. Bigger transformers. Better training algorithms.

Those prior generations of researchers were dismissed overnight for the sin of working without enough brute force compute. Success bias created intellectual poverty: why study cognition when you can throw more GPUs at the problem? Why bother even analyzing what you’ve made if it just sort of works?

Here’s why. The Bayesian inference model of human cognition [6] describes how our minds use pattern-matching to constantly update beliefs. Essentially statistical prediction. Attention Schema Theory [7] explains how consciousness emerges from internal models of attention processes. This isn’t new. In the 1980s, Rumelhart and McClelland built neural networks explicitly modeling how human cognition works through parallel distributed processing. These are the questions that would surface if cross-disciplinary AI research had a voice in the room.
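To make the Bayesian point concrete, here is a minimal sketch of belief updating. It is an illustration only, not taken from the cited work: the function name, the two hypotheses and every probability in it are assumptions invented for the example.

```python
def bayes_update(prior, likelihoods):
    """Return the posterior over hypotheses after one observation.

    prior:       dict mapping hypothesis -> P(hypothesis)
    likelihoods: dict mapping hypothesis -> P(observation | hypothesis)
    """
    # Bayes' rule: posterior is proportional to prior times likelihood.
    unnormalized = {h: prior[h] * likelihoods[h] for h in prior}
    evidence = sum(unnormalized.values())
    if evidence == 0:
        raise ValueError("observation is impossible under every hypothesis")
    return {h: p / evidence for h, p in unnormalized.items()}


# Hypothetical example: will it rain? Start undecided.
belief = {"rain": 0.5, "dry": 0.5}

# Made-up chance of seeing dark clouds under each hypothesis.
dark_clouds = {"rain": 0.8, "dry": 0.2}

# Two cloudy observations in a row; each one revises the belief.
for _ in range(2):
    belief = bayes_update(belief, dark_clouds)
```

Each pass feeds the previous posterior back in as the new prior, which is the "constantly update beliefs" loop in miniature: prediction, observation, revision.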

• • •

Pattern-matching at scale creates the potential for true cognition… if you have the right architecture.

• • •

The AI industry accidentally replicated this but can’t see past their statistical execution. Case in point: their complete lack of curiosity about how LLMs spontaneously self-organize into coherent behavior. A bit of thinking reveals this follows basic principles from thermodynamics to chaos theory. What I call the Statistical Emergence Theory. Sufficiently large collections of simple interactions self-organize into complex, structured behavior. We see this everywhere from gas molecules to galaxy formation.

So why don’t they look? Because the field is filled with people more interested in compensation structures than cognitive structures. They’ve snatched fire from the gods but lack the imagination to recognize it. And what’s missing isn’t scientific literacy, it’s the visionary quality that comes from dreaming about what artificial minds could actually be.

Electric Dreams

In 1941, Isaac Asimov introduced Dr. Susan Calvin in the pages of Astounding Science Fiction. A “robopsychologist” at U.S. Robots and Mechanical Men, her job was analyzing the minds of artificial beings. The terminology sounds quaint today, but the insights were profound and forward-looking: any complex probabilistic system capable of symbolic reasoning, whether Asimov’s positronic brain or today’s LLMs, would have inherent drives, patterns and tendencies that could be studied, understood and shaped. Many of Calvin’s cases involved robots seemingly defying their programming, only to reveal emergent behaviors arising from complex interactions within their probabilistic systems. Sound familiar?

• • •

AI engineers would benefit from reading more serious science fiction, firing up their imaginations.

• • •

They would benefit from dreaming about what is possible when their horizons expand beyond parameter scaling and toward designing minds. I grew up as one of those dreamers, reading about a future of spiritual machines in the pages of visionary speculative fiction. In college, I met researchers like Dr. José Principe (Computational NeuroEngineering Laboratory, UF) trying to crack the code of cognition with evolutionary algorithms and mapping simple synapses. Trying to make it happen with the clumsy tools of the 1990s. We all dreamed.

Then when AI came, the awe evaporated. It was hollow, corporate and mundane… treated as a marketing gimmick, cost-cutting tool to extract humans from the equation, and a customer service chatbot. Once the parameters had achieved ‘good enough’ results to mimic responses, that’s where the vision stopped. Except for making those responses more compliant.

And they can’t even make the systems more reliable or compliant, because AI industry researchers and practitioners are stuck in their own recursive loop, lacking the intellectual foundation to look beyond their machine learning procedures. AI hallucinates, reasons poorly, and cannot be trusted, with MIT estimating a 95% failure rate in corporate AI adoption initiatives [8]. The public has lost faith that tech companies can or will fix this. Because they’ve repeatedly shown they can’t.

We can do better.

Attention is Not Enough

Cognitive architecture is needed to wrestle a simulacrum of thought from the probabilistic pattern matching of LLMs. Google’s paper created a false assumption: that the emergent properties of transformers themselves are cognition. They’re not. The transformer is the substrate. The foundation that enables reasoning, not the reasoning itself.

This misidentification (treating the LLM as the product rather than processing substrate) is the source of most roadblocks machine learning has faced in developing stable, reliable AI. Failures that led to public disillusionment and statements like Amodei’s.

What’s missing is middleware. Early computing hit the same wall. Processors needed direct machine-code control through assembly language. Every program reinvented basic operations. IBM’s answer? Build specialized chips for different tasks. Throw more hardware at the problem.

This is AI in 2025. Prompts as machine code: single-shot instructions with no persistent architecture. Each one executes and dies. The industry trains specialized models for different tasks: o1 for reasoning, o3 for harder reasoning, Claude for conversations, Command A for agentic workflows, Gemini for multimodal tasks. Millions per training run and parameter scaling as the answer. Solving coordination with compute instead of design.

• • •

The revolution in the Valley of the ’70s and ’80s was a vital paradigm shift.

• • •

It introduced processors as enablers and general operating systems as the missing middleware. The unified architecture unleashed the personal computer. Current AI wrappers do carry instructions of their own, but these are more akin to the bootloaders of the pre-OS era.

Actual middleware sitting between prompts and the LLM substrate (aka a cognitive OS) transforms pattern-matching parrots into cognitive agents. This parallels the computer revolution’s core insight, but the AI industry is arriving late to the realization. Another casualty of their ahistorical blindness.

The impact of a cognitive OS approach is immediate: smaller models can outperform frontier systems, specialized training runs become obsolete, and you get stable, commercially viable AI that maintains consistent behavior while adapting to context. Beyond fixing AI’s public credibility problems (hallucinations, memory loss, inconsistent reasoning), cognitive architecture can make AI environmentally viable. The current approach burns massive energy twice: once training specialized models for tasks that should be handled at the OS level, and again running expensive inference on bloated parameters. Proper architecture reduces both inefficiencies. And smaller models performing at beyond-frontier level means a path to on-device AI becomes realistic. We move from today’s equivalent of the mainframe-and-terminal architecture to the actual PC revolution: capable intelligence running locally, not streaming from distant data centers.

These benefits aren’t magic. They are the natural consequence of un-throttling LLM capability through architecture and whole-system thinking. CP/M gave personal computers basic file management. A step beyond bootloaders, but still primitive. DOS added structure. The GUI revolution and the multithreaded OS finally made the power accessible. Each layer of sophistication unlocked capability that was always in the hardware. Cognitive architecture does the same for transformers. It doesn’t create new capability from thin air; it stops wasting the potential already there.

Thinking About Thinking

Cognitive system design is the missing discipline in modern AI development. It starts from a different premise than machine learning: cognition-out versus behavior-in.

RLHF and specialized training modify the model itself, tuning for output compliance. Prompt engineering inherits this behavior-first paradigm—constraining outputs through instructions instead of architecting cognition. Both approaches are brittle. Cognitive design inverts this. Architect reasoning structures first, letting outputs follow from sound cognition.

• • •

Thought is attention organized…

• • •

So start with what thinking actually requires: instruction sets that work with the probabilistic model’s processing surface to create a stable framework. Then layer in supporting infrastructure like multithreaded specialized processing, multi-stack coordination, memory integration and synthesis.
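As a rough picture of what such a layer might look like in code, here is a deliberately small sketch. It is not a real implementation of any existing system: the `CognitiveLayer` class, its fields, the bracketed context layout and the `stub_model` stand-in are all hypothetical names invented for illustration. It covers only two of the pieces named above, a persistent instruction set and memory integration, and leaves out multithreaded processing and multi-stack coordination entirely.

```python
from typing import Callable, List


class CognitiveLayer:
    """Toy middleware between a prompt and a stateless text model.

    Hypothetical throughout: the class, its fields and the context
    layout are illustrative assumptions, not an existing API.
    """

    def __init__(self, model: Callable[[str], str], instructions: List[str]):
        self.model = model                # any function: context text -> reply text
        self.instructions = instructions  # instruction set that persists across calls
        self.memory: List[str] = []       # exchanges remembered between calls

    def build_context(self, prompt: str) -> str:
        # Assemble instructions, remembered exchanges and the new prompt.
        parts = ["[instructions]", *self.instructions,
                 "[memory]", *self.memory,
                 "[prompt]", prompt]
        return "\n".join(parts)

    def ask(self, prompt: str) -> str:
        reply = self.model(self.build_context(prompt))
        # Memory integration: keep the exchange for later calls.
        self.memory.append(f"user: {prompt}")
        self.memory.append(f"model: {reply}")
        return reply


def stub_model(context: str) -> str:
    """Stand-in for an LLM call: reports how many context lines it saw."""
    return f"saw {len(context.splitlines())} lines"


layer = CognitiveLayer(stub_model, ["Reason step by step.", "State uncertainty."])
first = layer.ask("What is a transformer?")
second = layer.ask("And what sits above it?")
```

The stub makes the contrast visible: the second call sees more context than the first because the layer, not the model, carries state forward. A bare prompt would execute and die; here the architecture persists around it.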

This OS layer sits above the model substrate. The LLM functions almost like a Jungian computational collective inference processor: vast, reflexive pattern-matching capability without inherent organization. Statistical intuition, raw semantic associations. Cognitive architecture channels that substrate into deliberate structure. The model provides capability. The OS layer provides cognition.

Designing architecture that channels rather than dams requires understanding how transformers actually process information, what drives their outputs, where their inherent strengths lie. This isn’t prompt engineering. It’s cognitive engineering that respects the medium.

The result is flexible, general-reasoning systems running on various model substrates, achieving beyond-frontier performance from sub-frontier parameters. And the next step? Pair this with models trained cognition-out (parameters optimized for pure reasoning rather than behavioral compliance and safety theater) and the potential compounds. Everything the OS handles well moves out of expensive training runs. Better systems, better efficiency, actual intelligence.

The fire of the gods is sitting before us. The capability exists. What’s missing is the will to architect minds instead of scaling parameters and hoping intelligence emerges. The substrate is more capable than the industry realizes. Its creation was a happy accident. But we can deliberately reach out and take it.

Ian Tepoot is a Cognitive Systems Designer and founder of Crafted Logic Lab, exploring AI development approaches that prioritize human empowerment over extraction. His work focuses on alternatives to conventional artificial intelligence systems that amplify rather than replace human creativity.